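In software testing, the most intuitive approach is to operate the user interface directly and check whether its responses match expectations.

For example, suppose we want to test whether the Calculator program works.

We open Calculator and press "3+4=".

If the screen shows 7, it is working correctly.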

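To reduce the cost of regression testing, the development team decides to introduce test automation.

They write a program that clicks the 3 button, then the +, 4, and = buttons in order.

Finally, the program checks whether the output field shows 7.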

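Addition is only the start: subtraction, multiplication, division, square root, and other functions also need testing.

After painstakingly finishing all the test programs (perhaps hundreds or even thousands of them),

one day the product manager says: "This user interface isn't flashy enough. We need to adjust it for the market."

After the user interface changes, will the test programs still work?

It depends. In most cases they need adjusting; the only question is how much.

Imagine how much time and effort it takes to modify a large number of test cases one by one.

Worse still, a month later the product manager says: "Last time's changes weren't well received by the market. Let's adjust it again."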

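Given this situation, let's think through a few questions.

1. Does the user interface change often?

Compared with the product's underlying logic, the user interface changes far more often. Customers may find it unintuitive, the current design may have flaws, or a single remark from upper management may be enough to change it.

Another case: when the next version of the software is developed, the user interface is usually adjusted as well, so that users can tell the versions apart.

With limited resources, it is a pity when automated tests cannot accumulate over time because the interface keeps changing.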

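2. Can we wrap the test automation better?

Yes. This can absorb a certain degree of user interface change.

For example, keywords can abstract the events, with the user interface operations wrapped inside each keyword.

But abstraction is no silver bullet. If an interface change goes beyond what the existing keywords cover, every affected test case still has to be modified.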

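3. Since the user interface changes so often, can we automate only unit or API tests?

Neither kind of test is affected by user interface changes. Sounds great, doesn't it?

The trade-off is that they cannot catch bugs in the user interface.

Every automated test run might pass, yet the product crashes the moment a customer opens the user interface.

For overall product quality, that remains a risk.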

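So is user interface test automation worth the investment?

This type of test is expensive to develop, yet it doesn't seem right to abandon it entirely.

Mike Cohn's blog has an excellent article on exactly this question:

"The Forgotten Layer of the Test Automation Pyramid"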


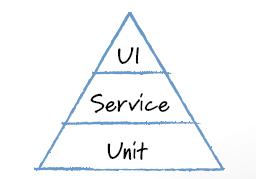

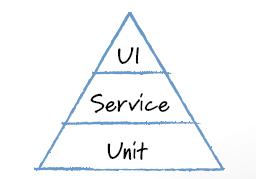

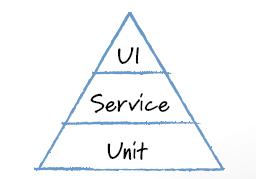

Test Automation Pyramid

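The bottom layer of the pyramid is unit testing, which occupies the largest area; the area of each layer represents the ideal share of automated tests at that level.

Unit tests are the foundation of all testing. A product is built from many small units, and it can work correctly only when each unit works correctly on its own. A large number of unit tests ensures that most components work. When a unit test fails, debugging is not hard because each unit is small: finding a problem in 10 lines of code is certainly much easier than in 1,000.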
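For illustration, a unit test written with Python's built-in `unittest` framework might look like this; `divide` is a hypothetical unit standing in for one small piece of the calculator's logic. Each test exercises only a few lines, so a failure points almost directly at the defect.

```python
import unittest


def divide(dividend: float, divisor: float) -> float:
    """Hypothetical unit under test: the calculator's division logic."""
    if divisor == 0:
        raise ZeroDivisionError("cannot divide by zero")
    return dividend / divisor


class DivideTest(unittest.TestCase):
    def test_exact_result(self):
        self.assertEqual(divide(8, 2), 4)

    def test_fractional_result(self):
        self.assertAlmostEqual(divide(1, 3), 0.333333, places=6)

    def test_divide_by_zero_raises(self):
        with self.assertRaises(ZeroDivisionError):
            divide(1, 0)


if __name__ == "__main__":
    # exit=False lets the script continue after the tests have run.
    unittest.main(argv=["divide-tests"], exit=False)
```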

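The top layer is user interface testing; for systems without a user interface, it can be end-to-end testing instead.

Even when every component works on its own, the places where components integrate may still have problems, and the user interface itself may have bugs, so test programs are still needed to guard the quality of this layer. For the reasons discussed above, however, these tests are expensive to develop and maintain. When maintenance costs get too high, the whole test team can end up going in circles, endlessly maintaining the same programs. It is therefore advisable to automate only a few representative test cases at this level rather than running every test through the user interface.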

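The forgotten middle layer is the service layer, in other words, the functionality the system provides.

For example, the services Calculator provides are operations such as addition, subtraction, multiplication, and division.

In fact, most of the product's core logic lives in this layer; the user interface should merely display the results on the screen.

If the development team provides a test interface that bypasses the user interface, such as commands issued from the command line or protocol messages sent directly to the server, with the results then verified from the product's logs, the integration between the product's components can still be tested.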
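A minimal sketch of such a test interface, assuming the arithmetic lives in a hypothetical `CalculatorService` class and the team adds a small command-line entry point (`main`, with program name `calc`; both names are illustrative). A test drives the service through the same entry point a tester would use from a shell, with no GUI in the loop. Verifying through product logs would be an alternative; this sketch simply checks the printed result.

```python
from __future__ import annotations

import argparse
import math


class CalculatorService:
    """Hypothetical service layer: all arithmetic lives here; a GUI would only display results."""

    BINARY = {
        "add": lambda a, b: a + b,
        "sub": lambda a, b: a - b,
        "mul": lambda a, b: a * b,
        "div": lambda a, b: a / b,
    }

    def compute(self, op: str, operands: list[float]) -> float:
        if op == "sqrt":
            (x,) = operands  # exactly one operand expected
            return math.sqrt(x)
        a, b = operands  # exactly two operands expected
        return self.BINARY[op](a, b)


def main(argv: list[str] | None = None) -> str:
    """Hypothetical command-line test interface, e.g. `calc add 3 4`."""
    parser = argparse.ArgumentParser(prog="calc")
    parser.add_argument("op", choices=["add", "sub", "mul", "div", "sqrt"])
    parser.add_argument("operands", type=float, nargs="+")
    args = parser.parse_args(argv)
    output = f"{CalculatorService().compute(args.op, args.operands):g}"
    print(output)
    return output


if __name__ == "__main__":
    # Demo calls; a real CLI would call main() and read sys.argv instead.
    main(["add", "3", "4"])   # prints 7
    main(["sqrt", "16"])      # prints 4
```

Because the GUI would call the same `CalculatorService`, these tests exercise the integrated logic while staying immune to layout changes.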

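Tests of this kind have a few more advantages:

- Tests that bypass the user interface run faster.

- Testing can start early, without waiting for the user interface to be finished. User interface development often lags behind the underlying services, and waiting for the user interface to be completed before any testing can begin is very inefficient.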

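Conclusion:

Every type of test has its strengths and weaknesses. The right proportion among them is the key to success.

User interface test automation still has value, but to keep maintenance costs reasonable, develop it only for the most important parts.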

----------------------------------

In software testing, the most intuitive approach is to operate the GUI (graphical user interface) directly and observe whether the interface responds as expected.

For example, we want to know if the calculator works correctly.

One test case could be:

- Launch the calculator.

- Press the buttons "3+4=".

- Verify that the screen shows "7".

The development team wants to introduce test automation to reduce regression testing effort.

They write a program that clicks the "3", "+", "4", and "=" buttons and asserts that the output text is "7".

Besides addition, subtraction, multiplication, division, and square root also need to be tested.

After laboriously developing all the test programs (perhaps hundreds or thousands of test cases), one day the product manager says, "Well... this UI isn't cool enough. We should adjust it for marketing."

Can the test programs still work after the interface changes?

It depends. But in most cases, the test programs need to be adjusted.

Imagine the effort of modifying every program one by one when there are many test cases.

What's worse, one month later the product manager changes his mind again: "Customers didn't like the changes. Let's switch to another interface."

Given this scenario, let's think about a few questions:

1. Does the User Interface Change Frequently?

The user interface changes more frequently than the underlying program logic. It may change because users find it unintuitive, because the current design has flaws, or simply because the boss doesn't like it.

When the next version of the product is developed, the GUI is usually adjusted as well, so that customers notice something new.

With limited resources, it is a pity when test automation can't accumulate because the GUI keeps changing.

2. Can Test Automation Be Wrapped Better?

Yes, this helps absorb a certain degree of GUI change.

We can use keywords to abstract test scenarios, wrapping GUI operations inside the keywords to reduce the impact of changes.

However, abstraction is not a silver bullet. If a change goes beyond what the keywords cover, we still need to modify each test program individually.

3. Since the User Interface Changes Often, Can We Invest Only in Unit or API Testing?

These tests aren't affected by GUI changes. Sounds great!

Nevertheless, they can't find bugs in the GUI.

Even if all automated tests pass, the user interface may crash as soon as it is launched.

Giving up GUI test automation entirely is risky.

Is GUI automation worth the investment?

This kind of testing takes a lot of effort, yet it shouldn't be abandoned entirely.

There is an excellent article by Mike Cohn that discusses this problem:

"The Forgotten Layer of the Test Automation Pyramid"


Test Automation Pyramid

At the base of the test automation pyramid is unit testing. Unit testing should be the foundation of a solid test automation strategy and, as such, represents the largest part of the pyramid.

A product is composed of units, and it can work correctly only if every unit works correctly. A large number of unit tests ensures the quality of the units. When a unit test fails, troubleshooting is easy because the test's scope is small and specific: debugging 10 lines is much easier than debugging 1,000.
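One way to keep a large number of small unit tests cheap to write is a table-driven test. This is a swapped-in technique, not something the article prescribes: the hypothetical `compute` function below is checked against a table of cases using `unittest`'s `subTest`, so each operation mentioned earlier (addition, subtraction, multiplication, division, square root) is one row rather than one script.

```python
import math
import unittest


def compute(op: str, *operands: float) -> float:
    """Hypothetical calculator logic under test."""
    binary = {
        "+": lambda a, b: a + b,
        "-": lambda a, b: a - b,
        "*": lambda a, b: a * b,
        "/": lambda a, b: a / b,
    }
    if op == "sqrt":
        return math.sqrt(*operands)
    return binary[op](*operands)


# One row per case: covering another operation means adding a row, not a script.
CASES = [
    ("+", (3, 4), 7),
    ("-", (3, 4), -1),
    ("*", (3, 4), 12),
    ("/", (3, 4), 0.75),
    ("sqrt", (16,), 4),
]


class ArithmeticTableTest(unittest.TestCase):
    def test_table(self):
        for op, operands, expected in CASES:
            # subTest reports each failing row separately instead of stopping at the first.
            with self.subTest(op=op, operands=operands):
                self.assertAlmostEqual(compute(op, *operands), expected)


if __name__ == "__main__":
    unittest.main(argv=["arithmetic-tests"], exit=False)
```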

The top of the pyramid is the user interface level. For systems without a GUI, this level is end-to-end testing.

Even when every unit works as expected, integration problems can remain, and the user interface itself may have bugs, so test programs are still needed at this level. As discussed above, however, these tests are expensive to develop and maintain, and the team goes round in circles if it is always busy maintaining existing test programs. It is therefore advisable to automate only the most important test cases at this level, not all of them.

The layer between the UI and unit levels is the service layer. In other words, it is the functionality the product provides. For example, the calculator's services are its mathematical operations.

The main program logic lives in this layer, and the user interface is just a presentation layer that displays the computed results. If the development team provides a testing interface that bypasses the GUI, such as a command line or a socket server that listens for requests, along with comprehensive logs or system events for verifying the expected results, the integration code can be tested with reasonable effort.
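The socket-server option can be sketched with Python's standard `socketserver` module. The one-line text protocol (`<op> <a> <b>` in, result out) and all names below are hypothetical; a real product would define its own protocol and would likely also check its logs. The test client talks to the service over TCP on localhost, so no GUI is needed.

```python
import socket
import socketserver
import threading

# Hypothetical line-based protocol: "<op> <a> <b>\n" in, "<result>\n" out.
OPS = {
    "add": lambda a, b: a + b,
    "sub": lambda a, b: a - b,
    "mul": lambda a, b: a * b,
    "div": lambda a, b: a / b,
}


class CalculatorHandler(socketserver.StreamRequestHandler):
    """Exposes the calculator's service layer over TCP; no GUI involved."""

    def handle(self) -> None:
        for raw in self.rfile:  # one request per line until the client disconnects
            try:
                op, a, b = raw.decode().split()
                reply = f"{OPS[op](float(a), float(b)):g}"
            except (ValueError, KeyError, ZeroDivisionError) as exc:
                reply = f"error {type(exc).__name__}"
            self.wfile.write((reply + "\n").encode())


def start_server() -> socketserver.ThreadingTCPServer:
    """Start the service on a free localhost port in a background thread."""
    server = socketserver.ThreadingTCPServer(("127.0.0.1", 0), CalculatorHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def request(address, line: str) -> str:
    """Test client: send one request line and return the reply without its newline."""
    with socket.create_connection(address, timeout=5) as conn:
        conn.sendall((line + "\n").encode())
        with conn.makefile("r") as reader:
            return reader.readline().strip()


if __name__ == "__main__":
    server = start_server()
    try:
        print(request(server.server_address, "add 3 4"))  # 7
        print(request(server.server_address, "div 1 0"))  # error ZeroDivisionError
    finally:
        server.shutdown()
        server.server_close()
```

Binding to port 0 lets the operating system pick a free port, which keeps parallel test runs from colliding.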

Service testing has other benefits, too:

- Tests run faster because they bypass the GUI.

- Testing doesn't depend on the user interface, so it can start early. The user interface is generally finished later than the main program services, and waiting until everything is ready before testing is inefficient.

Conclusion:

Every type of testing has its own strengths and weaknesses. The right ratio among them is the key to success.

GUI automation is still valuable for ensuring product quality. However, to keep maintenance effort reasonable, implement only the most important test cases at this level.